What is an Event Management System?

An Event Management System (EMS), as the name suggests, is a scalable system built around events: it processes and analyzes them and makes decisions based on their data. Events fall into the following categories:
1. User actions performed in the app (clicks, scrolls, views, etc.)
2. Database signals (on create, update, delete)
3. Events from backend services (for certain flows, such as recharges and post likes)

Need for an Event Management System

Why build such a system when all of the data is already available in big data systems? Big data systems have certain limitations, and there are other factors to weigh before deploying such a system.

1. Real-time data:- Big data systems hold all the data, but they are not updated in real time, which makes them only eventually consistent. In practice, most decisions need to be made in real time or near real time, so big data systems cannot be relied on for them.

2. Cost efficiency:- Scanning through large volumes of data is expensive. An event management system segregates data and stores it in the desired format, which reduces that cost.

3. Scalability:- As events grow in size and complexity, managing them manually becomes impractical. An EMS can easily scale to handle thousands of attendees, multiple sessions, and complex logistics without a decline in service quality.

4. Adaptability: Whether it's a small workshop or a large-scale conference, EMS can be customized to fit the needs of different types and sizes of events.

5. Coordination: It provides a central platform where all stakeholders (organizers, attendees, vendors, etc.) can access up-to-date information, ensuring everyone is on the same page and can coordinate more effectively.

6. Insight Generation: EMS enables the collection of valuable data such as attendance numbers, engagement levels, feedback, and other metrics. This data can be analyzed to gain insights into what works and what doesn’t, allowing for continuous improvement.

7. Targeted Marketing: By understanding attendee behavior and preferences, organizations can create targeted marketing campaigns, resulting in better outreach and increased participation.

8. Feedback Collection: After the event, EMS can facilitate feedback collection, allowing organizers to gauge attendee satisfaction and gather suggestions for future improvements.

9. Reporting and Analytics: Comprehensive reports can be generated to analyze various aspects of the event, such as financial performance, attendee demographics, and engagement levels, helping in strategic planning for future events.

Building the Foundation: Tools and Technologies

The backbone of any successful Event Management System (EMS) lies in the technologies and tools used to construct it. For our EMS, we leveraged a suite of powerful tools that allowed us to handle high volumes of data, ensure real-time processing, and maintain system scalability and reliability.

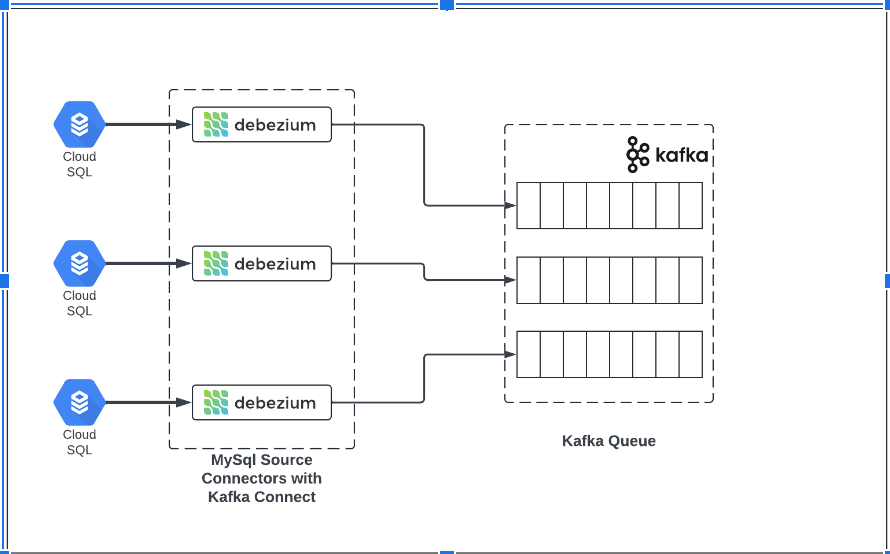

1. Real-Time Data Streaming with Debezium and Kafka Connect:- To ensure that our EMS could handle data in real time, we implemented Debezium along with Kafka Connect. Debezium is an open-source distributed platform for change data capture (CDC): it reads a database's change log and emits an event for every row-level insert, update, and delete. This let us pull data changes from various databases as they happened, so our event system always had the latest information. Debezium's connectors run on Kafka Connect, which acted as a reliable bridge into Kafka and moved the captured changes seamlessly into our system.
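To make the database-signal category concrete, here is a minimal sketch of how a consumer might turn a Debezium change event into a flat create/update/delete signal. It assumes Debezium's JSON change-event envelope (`before`, `after`, `source`, `op`, `ts_ms`) produced by a MySQL connector; the function name and the output fields are illustrative assumptions.

```python
import json
from typing import Any, Optional

# Debezium "op" codes in the change-event envelope.
OP_NAMES = {"c": "create", "u": "update", "d": "delete", "r": "snapshot_read", "t": "truncate"}


def parse_change_event(raw: Optional[str]) -> Optional[dict[str, Any]]:
    """Convert one Debezium change event (JSON text) into a flat database signal.

    Returns None for tombstones, i.e. records whose value is null.
    """
    if raw is None:
        return None
    message = json.loads(raw)
    if message is None:
        return None
    # With the JSON converter's schemas enabled, the envelope is wrapped in {"schema", "payload"}.
    envelope = message["payload"] if "schema" in message and "payload" in message else message
    source = envelope.get("source", {})
    return {
        "signal": OP_NAMES.get(envelope["op"], envelope["op"]),
        "table": f"{source.get('db')}.{source.get('table')}",
        "before": envelope.get("before"),  # row state before the change (None for creates)
        "after": envelope.get("after"),    # row state after the change (None for deletes)
        "ts_ms": envelope.get("ts_ms"),
    }
```

Debezium emits a tombstone (a record with a null value) after each delete so that Kafka log compaction can drop the key; the sketch simply skips those.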

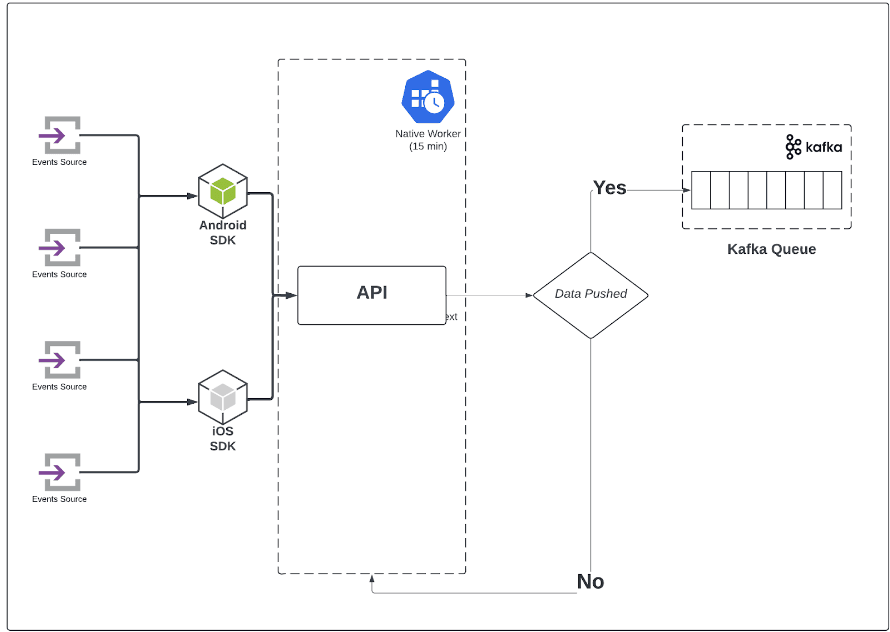

2. SDK Integration for Frontend Data Collection:- Understanding user interactions is crucial for improving user experience and making informed business decisions. To achieve this, we developed a Software Development Kit (SDK) that integrated directly with our frontend applications. This SDK was responsible for capturing various user interactions such as clicks, scrolls, and other behaviors. By sending these interactions to our backend, we were able to gather valuable analytics and insights into user behavior.
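The SDK's core job of collecting interactions and shipping them to the backend in batches can be sketched as follows. The real SDK runs in the frontend, so this Python version only illustrates the logic; `EventBuffer`, `track`, `flush`, and the `send` transport callback are hypothetical names, and the batch size is an arbitrary assumption.

```python
import json
from typing import Callable


class EventBuffer:
    """Hypothetical SDK-side buffer: collects interaction events and sends them in batches."""

    def __init__(self, send: Callable[[str], bool], max_batch: int = 20):
        self._send = send            # transport callback; returns True on success
        self._max_batch = max_batch
        self._pending: list[dict] = []

    def track(self, event_type: str, **props) -> None:
        """Record one interaction (click, scroll, view, ...) and flush when the batch is full."""
        self._pending.append({"type": event_type, **props})
        if len(self._pending) >= self._max_batch:
            self.flush()

    def flush(self) -> bool:
        """Send all pending events as one JSON array; keep them if the send fails."""
        if not self._pending:
            return True
        batch, self._pending = self._pending, []
        if self._send(json.dumps(batch)):
            return True
        # On failure, put the batch back in front so no event is dropped.
        self._pending = batch + self._pending
        return False
```

Keeping a failed batch in the buffer instead of dropping it trades a little memory for not losing events on a flaky network; a production SDK would also cap the buffer and flush on a timer or when the page is hidden.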

3. Event Processing with Kafka Topics:- Kafka's robust messaging capabilities made it an ideal choice for handling the multitude of events generated by our applications. We used Kafka topics to collect and categorize events from various backend services. This architecture enabled us to process events asynchronously, ensuring that our system remained responsive and could scale to handle large volumes of data without performance degradation.
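A sketch of how events might be routed to category topics, assuming a hypothetical topic naming scheme and that each event carries a `category` and a `user_id`. Using the user id as the message key matters: Kafka's default partitioner maps equal keys to the same partition (as long as the partition count does not change), and Kafka only guarantees ordering within a partition.

```python
from typing import Any

# Hypothetical mapping from event category to Kafka topic name.
TOPIC_BY_CATEGORY = {
    "user_action": "ems.user-actions",
    "db_signal": "ems.db-signals",
    "backend": "ems.backend-events",
}
DEAD_LETTER_TOPIC = "ems.unrouted"  # events with an unknown category go here for inspection


def route(event: dict[str, Any]) -> tuple[str, bytes]:
    """Return (topic, message key) for an event.

    Keying by user id sends all of a user's events to the same partition,
    so their relative order is preserved.
    """
    topic = TOPIC_BY_CATEGORY.get(event.get("category"), DEAD_LETTER_TOPIC)
    key = str(event.get("user_id", "")).encode("utf-8")
    return topic, key
```

With the kafka-python client, the result could be passed to `producer.send(topic, key=key, value=payload)`.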

4. Data Storage Solutions: S3, MinIO, and MySQL

- Primary Storage with S3:- Amazon S3 served as our primary storage solution. Its scalability, durability, and cost-effectiveness made it perfect for storing the vast amounts of data generated by our event system. S3 allowed us to store and retrieve any amount of data from anywhere, providing the flexibility needed for our growing data needs.

- Secondary Storage with MinIO: As a complementary storage solution, we used MinIO, an open-source, high-performance, distributed object storage system. MinIO acted as a failover and redundancy storage option, ensuring that our data remained safe and accessible even in the event of primary storage failure.

- User Data Management with MySQL: For managing user-specific data, we chose MySQL due to its reliability, ease of use, and support for complex queries. MySQL allowed us to store structured user data efficiently, making it easy to query and retrieve specific user information when needed.
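The primary/failover split between S3 and MinIO can be illustrated with a minimal write-path sketch. The `ObjectStore` protocol, `FailoverStore`, and the in-memory stand-in are hypothetical; in practice both backends could sit behind an S3-compatible client, since MinIO implements the S3 API.

```python
from typing import Protocol


class ObjectStore(Protocol):
    def put(self, key: str, data: bytes) -> None: ...


class FailoverStore:
    """Write to the primary store (e.g. S3); fall back to the secondary (e.g. MinIO) on error."""

    def __init__(self, primary: ObjectStore, secondary: ObjectStore):
        self.primary = primary
        self.secondary = secondary

    def put(self, key: str, data: bytes) -> str:
        try:
            self.primary.put(key, data)
            return "primary"
        except Exception:
            # Primary unavailable: keep the data safe on the secondary store.
            self.secondary.put(key, data)
            return "secondary"


class InMemoryStore:
    """Stand-in for a real object store, with an optional simulated outage."""

    def __init__(self, fail: bool = False):
        self.objects: dict[str, bytes] = {}
        self.fail = fail

    def put(self, key: str, data: bytes) -> None:
        if self.fail:
            raise ConnectionError("store unavailable")
        self.objects[key] = data
```

This sketch only covers writes; copying objects that landed on the secondary back to S3 after it recovers is left out.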

Flexible Event Storage with Elasticsearch:- Given the diverse nature of the events and the need for flexible querying, we used Elasticsearch to store our event data. Elasticsearch's dynamic mapping (the reason it is often described as schema-less) allowed us to store events of many different shapes, enabling us to capture and analyze a wide variety of data without defining a rigid schema upfront. Additionally, Elasticsearch provided powerful search and analytics capabilities, making it easier to sift through large datasets and extract meaningful insights.
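Because events are written to Elasticsearch in batches, the `_bulk` API (newline-delimited JSON: one action line followed by one document line per event) is a natural fit. The sketch below builds such a body and routes each event to a daily rolling index per category and flow; the `events-<category>-<flow>-<date>` naming scheme and the event fields are assumptions. Elasticsearch index names must be lowercase, so real category and flow values would need normalizing.

```python
import json
from datetime import datetime, timezone
from typing import Any, Iterable


def rolling_index(category: str, flow: str, ts: datetime) -> str:
    """Hypothetical naming scheme: one index per category, flow and UTC day."""
    return f"events-{category}-{flow}-{ts:%Y.%m.%d}"


def bulk_body(events: Iterable[dict[str, Any]]) -> str:
    """Build an NDJSON body for Elasticsearch's _bulk API."""
    lines = []
    for event in events:
        ts = datetime.fromtimestamp(event["ts_ms"] / 1000, tz=timezone.utc)
        index = rolling_index(event["category"], event["flow"], ts)
        lines.append(json.dumps({"index": {"_index": index}}))  # action line
        lines.append(json.dumps(event))                          # document line
    return "\n".join(lines) + "\n"  # _bulk requires a trailing newline
```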

Data Analysis with Athena:- To query the data stored in S3 efficiently, we utilized Amazon Athena. Athena is a serverless, interactive query service that makes it easy to analyze data directly in S3 using standard SQL. By leveraging Athena, we were able to perform ad-hoc analyses, generate reports, and gain insights from our event data without the need for complex data pipelines or additional infrastructure.
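Athena bills by the amount of data scanned, so the way event files are laid out in S3 directly affects query cost. A common approach, sketched here under assumed names, is Hive-style partitioned keys (`key=value/` path segments): when the Athena table declares matching partition columns and its partitions are registered (for example with `MSCK REPAIR TABLE` or partition projection), filters on those columns let Athena skip unrelated prefixes. The key layout, the table name `ems_events`, and the column names are hypothetical.

```python
from datetime import datetime, timezone


def s3_key(category: str, ts_ms: int, batch_id: str) -> str:
    """Hive-style partitioned object key, e.g. events/category=click/dt=2024-01-31/hour=09/<batch>.json."""
    ts = datetime.fromtimestamp(ts_ms / 1000, tz=timezone.utc)
    return f"events/category={category}/dt={ts:%Y-%m-%d}/hour={ts:%H}/{batch_id}.json"


# Example Athena query that only needs one day of click events,
# assuming category and dt are partition columns of ems_events.
QUERY = """
SELECT user_id, count(*) AS clicks
FROM ems_events
WHERE category = 'click' AND dt = '2024-01-31'
GROUP BY user_id
"""
```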

By carefully selecting and integrating these tools and technologies, we built a robust, scalable, and efficient Event Management System. Each component played a critical role in ensuring that our system could handle real-time data, provide deep insights into user behavior, and support the ever-growing demands of our applications. The choice of these technologies allowed us to create a seamless experience for both users and administrators, driving engagement and enhancing the overall performance of our applications.

Challenges in Designing Event Management Systems

Data Format and Consistency:- One of the primary challenges was managing the various formats of data coming into the system. Events generated by different services often had different structures, making it difficult to store and process them consistently. Ensuring data consistency across these varying formats was critical for maintaining the integrity and reliability of our system.

SOLUTION:- We implemented a data transformation layer to standardize event formats before ingestion. This layer ensured that all incoming data adhered to a common schema, simplifying storage and processing. Additionally, we used schema validation tools to enforce consistency and detect discrepancies early.
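A minimal sketch of such a transformation layer, assuming one adapter function per source that maps its raw format onto a common schema, followed by a hand-rolled type check. The source names, field names, and schema are hypothetical, and the check stands in for whichever schema-validation tool is used (JSON Schema or Avro with a schema registry are common choices).

```python
from typing import Any, Callable

# Hypothetical per-source adapters mapping each service's raw format to the common schema.
ADAPTERS: dict[str, Callable[[dict[str, Any]], dict[str, Any]]] = {
    "web_sdk": lambda r: {"user_id": r["uid"], "name": r["action"],
                          "ts_ms": r["time"], "props": r.get("meta", {})},
    "recharge_service": lambda r: {"user_id": r["customer_id"], "name": "recharge",
                                   "ts_ms": r["created_at_ms"], "props": {"amount": r["amount"]}},
}

# Common schema: field name -> accepted type(s).
SCHEMA = {"user_id": (int, str), "name": str, "ts_ms": int, "props": dict}


class SchemaError(ValueError):
    """Raised when an event cannot be mapped onto the common schema."""


def normalize(source: str, raw: dict[str, Any]) -> dict[str, Any]:
    if source not in ADAPTERS:
        raise SchemaError(f"no adapter for source {source!r}")
    try:
        event = ADAPTERS[source](raw)
    except KeyError as missing:
        raise SchemaError(f"{source}: missing field {missing}") from None
    # Reject events whose fields are absent or of the wrong type, so discrepancies surface early.
    for field, expected in SCHEMA.items():
        if not isinstance(event.get(field), expected):
            raise SchemaError(f"{source}: field {field!r} is missing or has the wrong type")
    event["source"] = source
    return event
```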

State Management and Data Integrity:- Maintaining the correct state of data in real-time systems is crucial, especially when the same data is used across multiple growth loops. Events could be updated, deleted, or modified, and the system had to accurately reflect these changes to prevent inconsistencies.

SOLUTION:- To manage state effectively, we used a combination of event sourcing and state synchronization techniques. By storing events as a sequence of changes, we could reconstruct the current state of any entity at any point in time. This approach also made it easier to replay events and correct any inconsistencies that might have occurred.

Data Transformations and Processing:- Events often required transformation before they could be used for analytics, reporting, or other business processes. These transformations needed to be efficient to avoid bottlenecks, especially as the volume of events grew.

SOLUTION:- We used stream processing tools, such as Apache Kafka Streams, to handle data transformations in real-time. By processing events as they arrived, we could perform necessary transformations on-the-fly and pass the processed data to downstream systems without significant delays. This approach minimized latency and ensured that transformed data was immediately available for use.

Handling Data in Multiple Growth Loops:- Using the same data across multiple growth loops posed a challenge in terms of data management and consistency. Each loop might require data in a different format or state, and changes in one loop could impact others.

SOLUTION:- We implemented a modular architecture that allowed different growth loops to access and process data independently. By decoupling data processing logic from the core event handling, we ensured that each loop could operate without interfering with others. Data versioning and tagging mechanisms were also used to track changes and ensure that each loop had access to the correct version of the data.
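The event-sourcing approach from the state-management solution above, combined with the versioning used for growth loops, can be sketched as a replay function: an entity's state is rebuilt by applying its changes in version order, and an optional `as_of` version pins the state a particular loop sees. `Change`, `replay`, and the create/update/delete semantics are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass(frozen=True)
class Change:
    version: int   # monotonically increasing per entity
    op: str        # "create" | "update" | "delete"
    data: dict[str, Any] = field(default_factory=dict)


def replay(changes: list[Change], as_of: Optional[int] = None) -> Optional[dict[str, Any]]:
    """Rebuild an entity's state by applying its changes in version order.

    `as_of` lets a consumer (e.g. one growth loop) pin the state it sees to a specific version.
    """
    state: Optional[dict[str, Any]] = None
    for change in sorted(changes, key=lambda c: c.version):
        if as_of is not None and change.version > as_of:
            break
        if change.op == "create":
            state = dict(change.data)
        elif change.op == "update":
            state = {**(state or {}), **change.data}  # later values win
        elif change.op == "delete":
            state = None
    return state
```

Sorting by version means changes that arrive out of order still produce the same state, which is what makes replaying the log a safe way to correct inconsistencies.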

Ensuring Low Latency for Real-Time Analytics:- Providing low-latency access to data was essential for real-time analytics and other services that relied on up-to-the-minute information. High latency could lead to outdated insights and hinder decision-making processes.

SOLUTION:- To achieve low latency, we optimized our data pipeline from end to end. This included using in-memory databases and caches, such as Redis, for quick data retrieval, and optimizing Kafka topic configurations to reduce processing delays. We also employed techniques like micro-batching and data partitioning to balance the load and ensure that data processing remained efficient even as volumes increased.
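Two of the techniques named above, data partitioning and micro-batching, can be combined in a short sketch: events are hashed to a partition by user id and then grouped into small batches per partition. The function names, the `user_id` key, and the batch size are assumptions. `zlib.crc32` is used instead of Python's built-in `hash()` because string hashing is randomized per process, which would break stable partition assignment; it does not reproduce Kafka's own murmur2-based partitioner.

```python
import zlib
from collections import defaultdict
from typing import Any, Iterable, Iterator


def partition_for(key: str, num_partitions: int) -> int:
    """Stable hash partitioning: the same key always lands on the same partition."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def micro_batches(events: Iterable[dict[str, Any]], num_partitions: int,
                  batch_size: int) -> Iterator[tuple[int, list]]:
    """Group events by partition and yield (partition, batch) chunks of at most batch_size."""
    buffers: dict[int, list] = defaultdict(list)
    for event in events:
        p = partition_for(str(event["user_id"]), num_partitions)
        buffers[p].append(event)
        if len(buffers[p]) == batch_size:
            yield p, buffers.pop(p)  # full batch: hand it to the processor
    # Flush whatever is left at the end of the input window.
    for p, batch in buffers.items():
        yield p, batch
```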

Architecture and Implementation

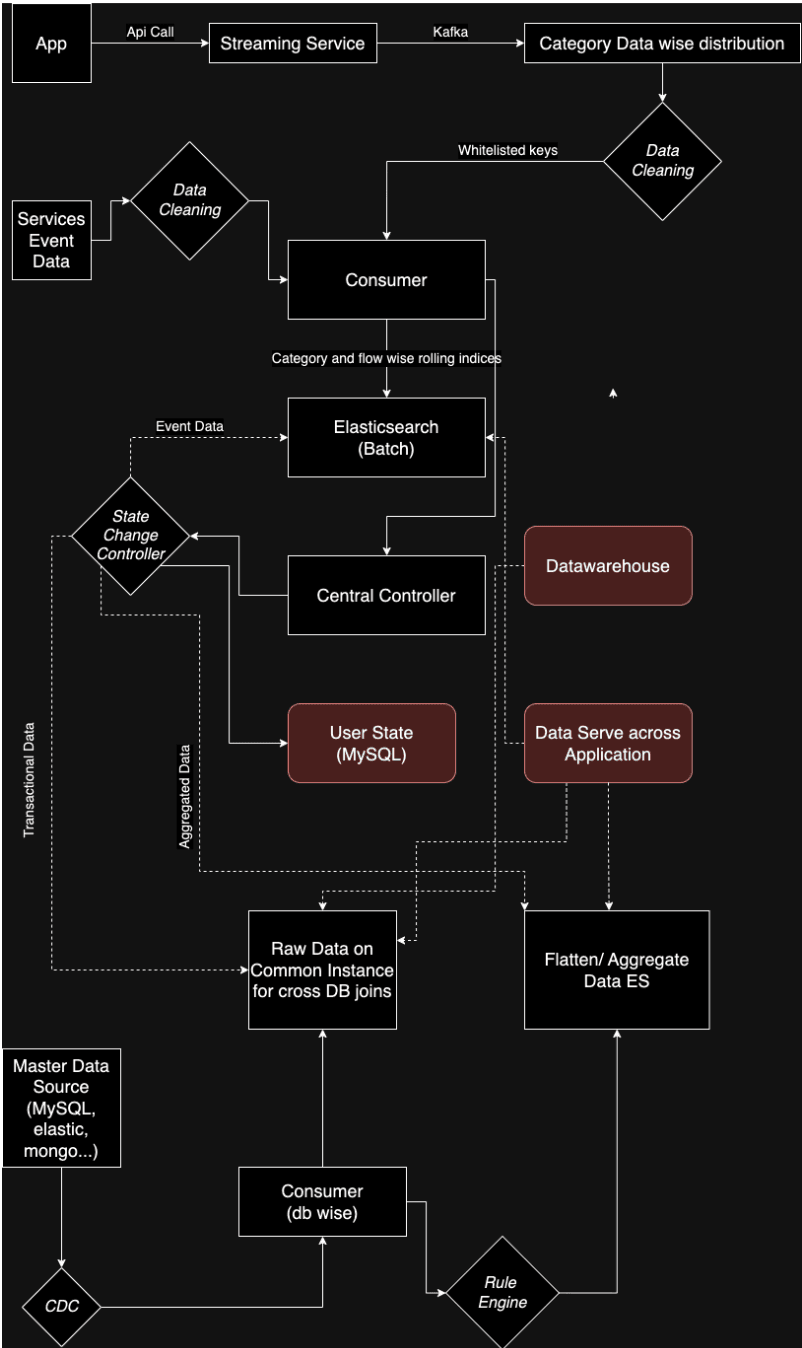

The architecture of our Event Management System (EMS) was designed to efficiently handle high volumes of data, ensure real-time processing, and maintain system scalability and reliability. Below, we outline the key components of the architecture and their roles in the overall system.

Data Ingestion and Distribution

Streaming Service and API Calls:- Data enters the EMS through API calls from various applications and through events published to Kafka topics. The API calls are handled by a centralized Streaming Service that pushes data into Kafka, a robust event streaming platform. Kafka ensures that data is collected efficiently and can be processed asynchronously, providing the backbone for real-time data flow.

Category-Wise Data Distribution: Once data is in Kafka, it is categorized and distributed based on predefined categories. This categorization ensures that data is directed to the appropriate processing streams, optimizing the processing load and ensuring that each type of data is handled according to its specific requirements.

Data Cleaning and Processing

Data Cleaning: Data cleaning is crucial both at ingestion and as data moves through the various processing stages. We implemented cleaning steps to filter out noise, validate formats, and ensure that only whitelisted keys are processed. This step maintains data integrity and quality, which is vital for reliable analytics.
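Whitelist-based cleaning is easy to express as a per-category set of allowed keys. In this sketch, the categories and keys are hypothetical, and treating events without a `user_id` or an integer `ts_ms` as noise is an assumed policy.

```python
from typing import Any, Optional

# Hypothetical per-category whitelist of keys allowed downstream.
WHITELIST = {
    "click": {"user_id", "ts_ms", "element", "screen"},
    "recharge": {"user_id", "ts_ms", "amount", "operator"},
}


def clean(category: str, event: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Keep only whitelisted, non-null keys; return None if the event should be dropped."""
    allowed = WHITELIST.get(category)
    if allowed is None:
        return None  # unknown category
    cleaned = {k: v for k, v in event.items() if k in allowed and v is not None}
    if "user_id" not in cleaned or not isinstance(cleaned.get("ts_ms"), int):
        return None  # noise: the event cannot be attributed to a user or ordered in time
    return cleaned
```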

Consumers and Elasticsearch: The cleaned data is then consumed by dedicated Consumers, which write it to Elasticsearch in batches, storing it in category- and flow-wise rolling indices. This approach enables efficient querying and retrieval of event data, making it suitable for real-time analytics and search functionalities.

State Management and Control

State Change Controller and Central Controller: These two controllers are the brain of the EMS, since maintaining accurate state information is essential to the system. The State Change Controller monitors and updates the state of event data, ensuring that the system reflects the most current information. The Central Controller acts as a hub, coordinating between different components such as Elasticsearch, MySQL (for user state management), and the Data Warehouse. This coordination ensures seamless data flow and integration across the system.

Data Storage and Aggregation

User State Management with MySQL: To manage user-specific information, we use MySQL databases. These databases store user state data, which is critical for personalized experiences and targeted engagement. MySQL's relational capabilities make it ideal for managing structured data efficiently.

Data Warehousing and Aggregation: For broader data analysis and reporting, we use a Data Warehouse. The Data Warehouse aggregates data from various sources, including batch data from Elasticsearch and user state information from MySQL. Aggregated data is further processed for analytics, enabling comprehensive insights into event performance and user behavior.

Flattening and Aggregating Data in Elasticsearch: To support complex querying and data analysis needs, we implemented data flattening and aggregation in Elasticsearch. This process consolidates data from different categories and states into a unified format, making it easier to perform large-scale analytics and generate actionable insights.
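The flattening step can be sketched as a recursive function that turns nested event documents into single-level records, which are then simple to group and count. The `_` separator, the field names, and the `count_by` helper are assumptions; an underscore is used rather than a dot because Elasticsearch treats dots in field names as object paths.

```python
from collections import Counter
from typing import Any, Iterable


def flatten(doc: dict[str, Any], prefix: str = "", sep: str = "_") -> dict[str, Any]:
    """Flatten nested dicts, e.g. {"device": {"os": "android"}} -> {"device_os": "android"}."""
    flat: dict[str, Any] = {}
    for key, value in doc.items():
        name = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))  # recurse into nested objects
        else:
            flat[name] = value                      # scalars and lists are kept as-is
    return flat


def count_by(docs: Iterable[dict[str, Any]], field: str) -> Counter:
    """Aggregate flattened documents by one field (missing values count under None)."""
    return Counter(flatten(d).get(field) for d in docs)
```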

Cross-Database Operations and Rule Engine

Raw Data on Common Instance: To perform complex operations such as cross-database joins, we store raw data on a common instance. This setup facilitates seamless data integration and ensures that comprehensive analytics can be performed across different data sources.
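The benefit of keeping raw data on a common instance is that a single query can join across databases. To keep the sketch runnable, it uses SQLite's `ATTACH DATABASE` to place two databases on one connection; in MySQL, the equivalent is qualifying tables as `database.table` when both databases live on the same server. Table and column names are hypothetical.

```python
import sqlite3

# Two databases on one "instance": main holds raw events, "users" holds user state.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS users")

conn.execute("CREATE TABLE events (user_id INTEGER, name TEXT)")
conn.execute("CREATE TABLE users.user_state (user_id INTEGER PRIMARY KEY, segment TEXT)")
conn.executemany("INSERT INTO events VALUES (?, ?)", [(1, "recharge"), (1, "click"), (2, "recharge")])
conn.executemany("INSERT INTO users.user_state VALUES (?, ?)", [(1, "new"), (2, "power")])

# Cross-database join: recharge counts per user segment.
rows = conn.execute(
    """
    SELECT s.segment, COUNT(*) AS recharges
    FROM events AS e
    JOIN users.user_state AS s ON s.user_id = e.user_id
    WHERE e.name = 'recharge'
    GROUP BY s.segment
    ORDER BY s.segment
    """
).fetchall()
print(rows)  # [('new', 1), ('power', 1)]
```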

Rule Engine: A key feature of our EMS is the Rule Engine, which allows us to define and apply business rules dynamically. These rules govern data processing, event handling, and decision-making, ensuring that the system adapts to changing business requirements without extensive reconfiguration.
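A rule engine of this kind can be as simple as rules stored as data and evaluated against each event. The rule format, operator names, and the two example rules below are hypothetical; because the rules are plain data, they could be loaded from a database or config service and changed without redeploying code.

```python
import operator
from typing import Any

# Supported comparison operators (hypothetical rule vocabulary).
OPS = {"eq": operator.eq, "ne": operator.ne, "gt": operator.gt, "gte": operator.ge,
       "lt": operator.lt, "lte": operator.le, "in": lambda a, b: a in b}

# Each rule fires its action only when all of its (field, op, value) conditions hold.
RULES = [
    {"name": "cross_sell_bill_pay",
     "when": [("name", "eq", "recharge"), ("amount", "gte", 199)],
     "then": "offer_bill_payment"},
    {"name": "nudge_new_user",
     "when": [("name", "eq", "app_open"), ("segment", "in", {"new"})],
     "then": "show_onboarding"},
]


def evaluate(event: dict[str, Any], rules: list[dict] = RULES) -> list[str]:
    """Return the actions of every rule whose conditions all match the event."""
    actions = []
    for rule in rules:
        if all(field in event and OPS[op](event[field], value) for field, op, value in rule["when"]):
            actions.append(rule["then"])
    return actions
```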

Impact and Results

The implementation of our Event Management System (EMS) brought about significant improvements across various key performance metrics, demonstrating the system's effectiveness in optimizing data management and enhancing user engagement. Here are some of the notable outcomes:

Increased Click Rates by 80%:- With the EMS in place, our click-through rates saw a remarkable 80% increase. This boost can be attributed to the system's ability to provide real-time, relevant data that powers personalized user experiences. By leveraging accurate and timely event data, we could better understand user behavior and tailor content and interactions to meet their specific needs and preferences.

Enhanced User Engagement:- The average number of services used per user doubled, indicating a significant rise in user engagement. This improvement reflects the effectiveness of our system in driving user interaction. By utilizing the EMS to understand user behavior and preferences, we were able to design and deliver more engaging and relevant services, encouraging users to explore more of what we offer.

Boost in Cross-Selling Opportunities:- Cross-selling of products and services within the app increased by 35%. The EMS enabled us to identify and act on cross-selling opportunities more effectively by providing a comprehensive view of user activities and preferences. By offering the right products and services to users at the right time, we successfully increased the uptake of complementary offerings, driving additional revenue.

Significant Cost Reduction in Data Querying:- One of the standout benefits of our EMS was the 70% reduction in the costs associated with data querying. The efficient data storage and processing capabilities of the EMS, combined with the use of scalable technologies such as Elasticsearch and optimized data pipelines, enabled us to minimize the resources required for data queries. This cost reduction allows for more sustainable scaling of our data operations as our business grows.

Improved Data Accuracy in Real-Time Analytics:- Data accuracy in real-time analytics improved by 98%, underscoring the reliability and precision of our EMS. Accurate data is crucial for making informed business decisions, and the high accuracy achieved through our EMS means we can trust the insights derived from our analytics. This improvement not only boosts confidence in our data but also enhances our ability to respond swiftly to market changes and user needs.

Future Enhancements and Goals

Building on the success of our current Event Management System (EMS), we are focused on enhancing its capabilities to ensure continued stability, reliability, and scalability. Our future goals aim to solidify our system's robustness, cater to evolving business needs, and drive even more substantial growth. Here’s what we plan to focus on:

Enhancing System Stability and Reliability:- To ensure the system remains operational at all times, we are committed to improving its stability. Our goal is to implement comprehensive failover and fallback mechanisms, ensuring that no events are missed even in the event of unexpected outages or disruptions. By incorporating redundant pathways and backup systems, we aim to minimize downtime and provide continuous service availability. This approach will also involve:

Automated Failover Systems: Establishing automated failover protocols that instantly switch to backup systems in case of a primary system failure, thus maintaining seamless operation.

Resilient Event Handling: Implementing mechanisms to capture and store events temporarily if any part of the system fails, ensuring that no critical user interactions are lost.

Ensuring No Event is Missed:- A critical goal for the future is to guarantee that every user event is captured and processed accurately. Missing events can lead to lost insights and a breakdown in the feedback loop that drives user engagement and business decisions. To achieve this, we plan to:

Enhanced Data Integrity Checks: Implement real-time data validation and integrity checks throughout the data pipeline to ensure that all events are captured correctly and consistently.

Audit and Logging Systems: Develop comprehensive auditing and logging mechanisms to track the flow of data through the system, making it easier to identify and rectify any issues promptly.

Implementing Complex Logic for Growth Optimization:- As our app continues to grow, so do the complexities of the user interactions and the business rules governing them. We aim to integrate more complex logic into our EMS to support advanced growth strategies. This includes:

Dynamic Rule Engine Expansion: Expanding the capabilities of our rule engine to handle more sophisticated decision-making processes, enabling the system to adapt to complex scenarios dynamically.

Personalized User Journeys: Utilizing real-time data to create highly personalized user journeys, tailored to individual preferences and behaviors. This will involve more granular user segmentation and targeted content delivery.

Continuous Optimization for Scalability:- Scalability remains a top priority as we anticipate growing volumes of data and user interactions. Our focus will be on optimizing system performance to handle increasing loads without compromising speed or accuracy. Future efforts will include:

Scalable Infrastructure: Continuing to leverage cloud-based infrastructure that can scale on demand, ensuring that the system can handle peak loads efficiently.

Performance Monitoring and Optimization: Implementing advanced monitoring tools to track system performance metrics and proactively identify areas for improvement.

Conclusion

The implementation of our Event Management System has proven to be a game-changer, delivering substantial improvements in user engagement, operational efficiency, and data-driven decision-making. By optimizing how we handle and process data, we have unlocked new growth opportunities, reduced costs, and positioned ourselves to better meet the evolving demands of our users. As we continue to refine and expand our EMS, we anticipate even greater results, further solidifying our competitive edge.

As we look to the future, our focus will be on further enhancing the stability, reliability, and scalability of our system. By ensuring that no event is missed, implementing robust failover mechanisms, and developing more sophisticated logic for user interactions, we are positioning ourselves to meet the evolving needs of our users and the business. These enhancements will enable us to deliver even more personalized experiences, maximize user engagement, and maintain a competitive edge in the market.

Our Event Management System is not just a tool for data collection; it's a cornerstone of our growth strategy. By continually refining and expanding its capabilities, we are committed to driving meaningful results, fostering innovation, and achieving sustainable growth. The future holds exciting possibilities, and with our EMS as the foundation, we are well-equipped to turn those possibilities into reality.